dP&L/dt: The Calculus of Common Sense

A few days ago, The Economist published a story reporting that 2.5x as many CEOs are defunding AI initiatives as a year ago: 42%, up from 17%. I've previously written about the four stages of the AI hype cycle, and we're solidly in the third.

Both as an advisor and as an executive, I've seen this movie before. You won't like how it ends. A few weeks ago, the CEO of a Fortune 500 tech company and I were chatting in his office overlooking the Bay, and he was complaining about his GenAI team: "We've invested $50 million, it's been two years, and they still haven't moved the needle." The pattern is always the same: massive technical investment, brilliant technical breakthroughs that look great in demos and papers, and then… crickets on business impact. Boards get impatient. Talent hemorrhages. Teams get "reorganized" (read: disbanded).

The Problem: Winning at the Wrong Math

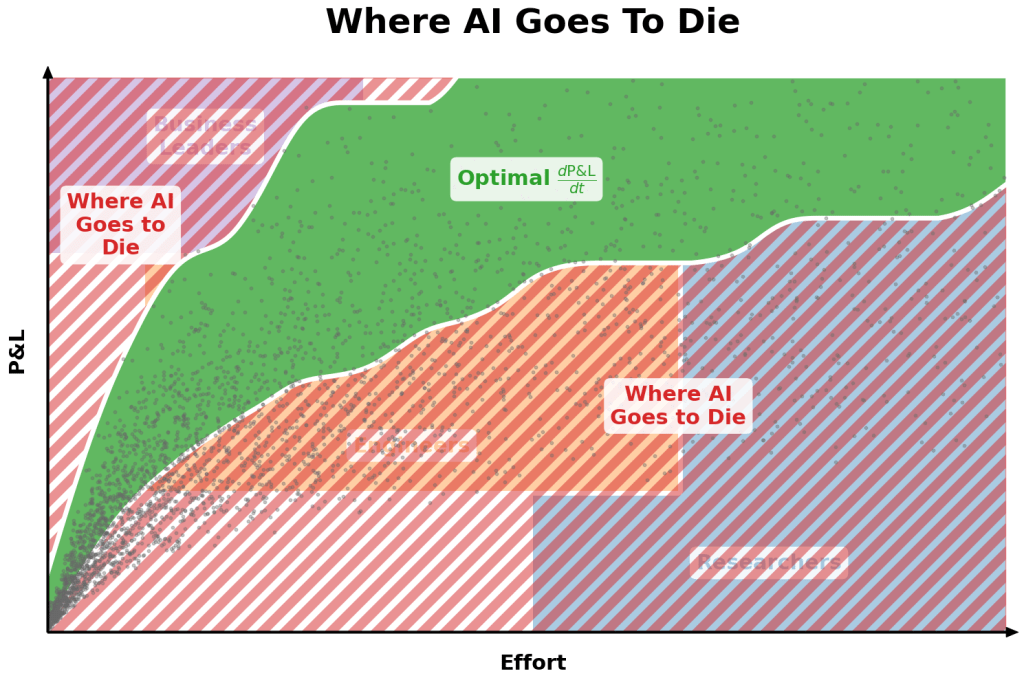

Here's the thing: You're not failing at AI. You're winning at the wrong math. Most AI initiatives fail not because research or engineering or business is hard, but because teams optimize for different loss functions that don't exist in the real world.

Everyone knows what a P&L is. You take revenue, subtract costs, get profit (or loss). There are two ways to improve it: make revenue bigger or costs smaller. But doing that takes effort. Project effort gets decided at the team level. Business teams want big results yesterday. Engineers want to solve today's practical problems. Researchers want to solve tomorrow's grand challenges. These aren't the same. The gap between them is where AI goes to die.

dP&L/dt: A Common-Sensical Loss Function

At Amazon, we taught everyone to work backwards. So, let's apply some common sense and work the problem backwards.

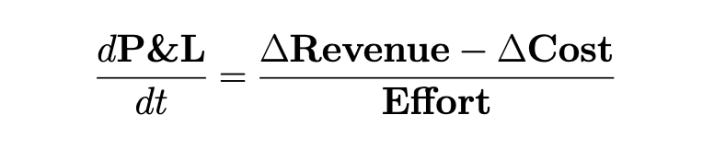

You want to improve the P&L. To do that, you need to make revenue bigger or costs smaller. Doing that takes effort, and different things take different amounts of effort. Given that you have finite effort (i.e., your employees), what do you focus on?

Whatever has the highest dP&L/dt!

What is dP&L/dt, you ask? Mechanically, it's just the derivative of the P&L with respect to effort (t), i.e., the change in your P&L divided by the effort it takes. If you do some math, you can show that if you keep picking projects with the highest dP&L/dt until you run out of effort [*], you'll get the highest P&L impact for your effort. This isn't rocket science but, done right, it's profound.

[* Yes, effort isn't fungible, projects have different risk profiles, balancing dependencies is tricky, and there are 17 other caveats. I'm simplifying a lot for now; see below.]
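The greedy rule is easy to sketch. The "do some math" claim holds exactly in the textbook fractional-knapsack setting, where a project's P&L impact scales linearly with the effort put into it, so the last project can be partially funded. The sketch below assumes exactly that; `Project`, `allocate_effort`, and all the numbers are hypothetical illustrations, not a prescribed tool.

```python
from dataclasses import dataclass


@dataclass
class Project:
    name: str
    pnl_impact: float  # hypothetical: expected change in P&L if fully staffed ($)
    effort: float      # hypothetical: effort to fully staff it (engineer-weeks), > 0

    @property
    def dpnl_dt(self) -> float:
        """P&L impact per unit of effort, i.e. the project's dP&L/dt."""
        return self.pnl_impact / self.effort


def allocate_effort(projects: list[Project], budget: float) -> tuple[dict[str, float], float]:
    """Fund projects in descending dP&L/dt order until effort runs out.

    Assumes impact scales linearly with effort, so the last project can be
    partially funded (the fractional-knapsack setting, where greedy is optimal).
    Returns the effort allocated per project and the total expected P&L impact.
    """
    allocation: dict[str, float] = {}
    total_impact = 0.0
    remaining = budget
    for p in sorted(projects, key=lambda p: p.dpnl_dt, reverse=True):
        if remaining <= 0 or p.dpnl_dt <= 0:
            break  # out of effort, or nothing left that improves the P&L
        spend = min(p.effort, remaining)
        allocation[p.name] = spend
        total_impact += spend * p.dpnl_dt
        remaining -= spend
    return allocation, total_impact


# Hypothetical portfolio with 25 engineer-weeks of capacity.
projects = [
    Project("churn model", pnl_impact=600_000, effort=12),    # $50k per engineer-week
    Project("support bot", pnl_impact=400_000, effort=20),    # $20k per engineer-week
    Project("research spike", pnl_impact=300_000, effort=5),  # $60k per engineer-week
]
allocation, impact = allocate_effort(projects, budget=25)
print(allocation)  # {'research spike': 5, 'churn model': 12, 'support bot': 8}
print(impact)      # 1060000.0
```

If projects are all-or-nothing, the problem becomes a 0/1 knapsack, and greedy-by-ratio turns into a heuristic that can miss the best portfolio. That is one of the simplifications the footnote above waves away.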

Spiritually, it's organizational DNA: a search-and-destroy mission. By identifying places with high dP&L/dt and systematically working backwards to break bottlenecks, you not only unleash P&L potential but also build a common vocabulary. By doing it continually over time, you build muscle memory and relationship habits.

Factions: The Root Cause of Failure

Why do common vocabulary and relationship habits matter? Let's break it down another level.

At its root, a common failure mode in many AI organizations is the divide between research, engineering and business. The symptoms are everywhere: skyrocketing AI investment alongside backtracking on AI promises, revenue churn at AI SaaS startups, and a lack of AI revenue impact at big companies.

As any seasoned manager (or parent) knows, incentives don't just produce outcomes — they have hundreds of knock-on behavioral effects. When researchers, engineers and business leaders are incentivized for different outcomes, they not only produce different outcomes (duh) but they also make value judgments about other outcomes and break up into factions.

Organizations break down when factions stop talking. Failing communication causes frustrations to fester. Festering frustrations lead to loss of trust, which means people stop even trying to communicate. Thus begins the downward spiral. The research team thinks business is short-sighted. The business team thinks research is building toys. Engineering thinks they're the only ones doing anything "real." When factions emerge, everyone's usually (partially) right. But, ultimately, it doesn't matter who's right because everyone gets fired (read: disbanded).

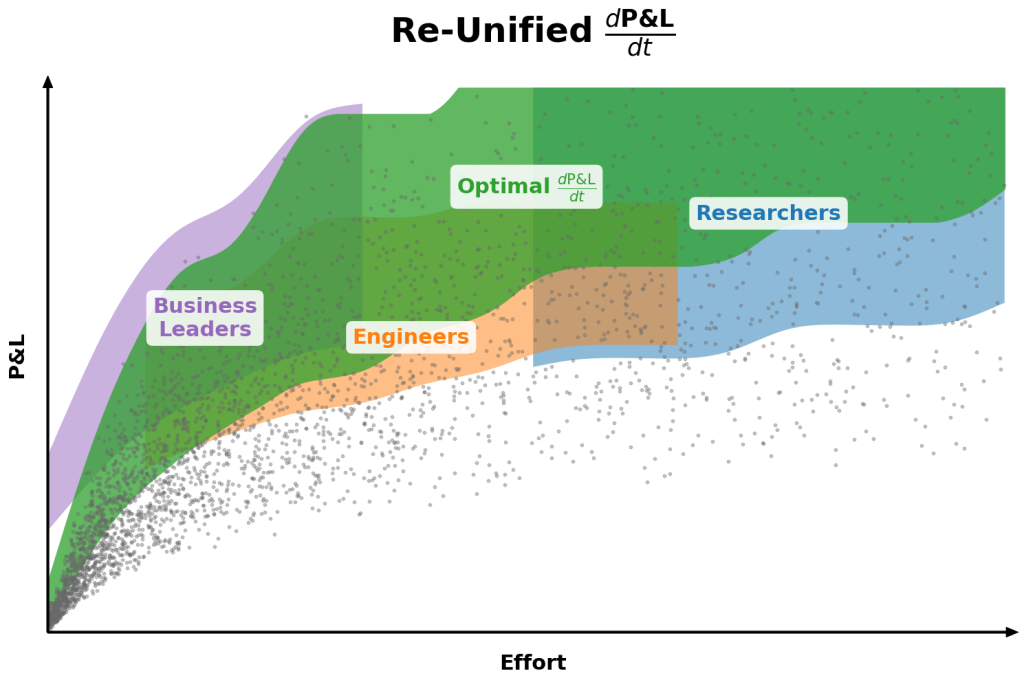

More than a metric, dP&L/dt DNA incentivizes different factions to think through the same end-to-end system and the same bottlenecks on the way to ultimate impact. Researchers start thinking about customer pain, engineers start incorporating longer-term research strategy, and business leaders start considering technical challenges.

And when you think deeply about someone else's challenges, a funny thing happens: empathy emerges. In one fell swoop, dP&L/dt DNA solves the revenue problem and breaks down factions, helping teams move from adversarial value judgments to empathetic problem-solving.

What Does This Look Like In Practice?

If you're seeing a gap between technical innovation and business impact, it's highly solvable. I've implemented this many times, from 10-person two-pizza teams to 50-person startups to large Fortune 500 orgs, in both executive and advisory roles.

What does it look like in practice? As with most management, it's highly contextual, but the spirit is the same: integrating the P&L work-back into your organization's day-to-day rhythms and team-level metrics. Your researcher isn't just showing BLEU scores; they're explaining the work-back from customer churn to model explainability to the tradeoffs between different mechanistic interpretability techniques. Your product manager isn't asking for "better accuracy"; they're quantifying exactly how much customer lifetime value each percentage point unlocks. Your executive team isn't just saying "AI is strategic"; they're debating which directions unlock the richest areas of dP&L/dt under different forward scenarios.
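Quantifying "how much each percentage point unlocks" can start as back-of-the-envelope arithmetic. A minimal sketch, assuming a simple customer lifetime value (CLV) model (monthly margin divided by monthly churn, constant churn, no discounting) and a hypothetical estimate of how much one accuracy point lowers churn. That accuracy-to-churn mapping is the hard part a team would actually have to measure; every number here is a placeholder.

```python
def customer_lifetime_value(monthly_margin: float, monthly_churn: float) -> float:
    """Simple CLV: monthly margin times expected lifetime in months (1 / churn).

    Assumes constant churn and ignores discounting.
    """
    return monthly_margin / monthly_churn


def value_of_one_accuracy_point(
    customers: int,
    monthly_margin: float,
    base_churn: float,
    churn_drop_per_point: float,  # hypothetical: churn reduction per accuracy point
) -> float:
    """Total CLV unlocked across the customer base by one more point of accuracy."""
    before = customer_lifetime_value(monthly_margin, base_churn)
    after = customer_lifetime_value(monthly_margin, base_churn - churn_drop_per_point)
    return customers * (after - before)


# Hypothetical: 10k customers, $100/month margin, 5% monthly churn, and each
# accuracy point lowers monthly churn by 0.2 percentage points.
print(round(value_of_one_accuracy_point(10_000, 100.0, 0.05, 0.002)))  # 833333
```

Dividing that figure by the effort needed to gain the point turns it into a dP&L/dt that can be ranked against everything else.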

To be sure, there are sharp edges, and judgment matters: the portfolio mix of short-term vs. long-term dP&L/dt; non-fungible effort (your star researcher can't just be reassigned to close deals); correlated risks (putting all your dP&L/dt eggs in one basket); and platform effects, where today's investment creates tomorrow's dP&L/dt multipliers (d²P&L/dt² for the nerdy).

Like many things in management, dP&L/dt is 40% science, 60% art, and 100% doable.

Hot Take: The Ultimate Winners

Here's my hot take: The companies that win this AI cycle won't have the smartest researchers or biggest budgets — they'll be the ones where everyone executes with dP&L/dt DNA.

Historically, some of technology's biggest breakthroughs have come from vertically integrated teams with unified objectives. Skunk Works' SR-71, AWS' two-pizza teams, and DeepSeek's efficiency play all succeeded because they were small, multi-functional teams where everyone ruthlessly optimized against a single mission. The converse proves the point: Google invented transformers and had web-scale data, but lost to OpenAI out of the gate because its research, engineering and product teams were fragmented; only since reorganizing into vertically integrated units has Google begun catching up. During rapid change, horizontal coordination breaks down.

The next AI winners won't be siloed research divisions or traditional enterprises bolting on AI. They'll be companies that (re-)build themselves as vertically integrated dP&L/dt machines, where AI teams sit next to customer success, where engineering works directly with research, and where business strategy and technical architecture are co-designed.


When teaching this concept, I frequently use the term "bets". During planning, we know some tasks will pay off with a nice return, but everything else is a bet, whether on time or on return. Calling them bets made engineers much more comfortable pulling the plug on the ones that wouldn't pay out once we had learned more. It also gave us a useful vocabulary: What's the expected return? How can we improve our odds?
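The "bets" vocabulary maps onto a plain expected-value calculation. A minimal sketch, assuming each bet can be summarized by its current odds of paying off, its payoff if it does, and the effort still left to spend; the `Bet` class, its fields, and the numbers are hypothetical. Only the remaining cost enters the calculation, so effort already sunk never argues for keeping a bet alive, which is what makes pulling the plug easier.

```python
from dataclasses import dataclass


@dataclass
class Bet:
    name: str
    p_success: float         # hypothetical: current estimate of the odds it pays off (0..1)
    payoff: float            # hypothetical: P&L impact if it pays off ($)
    remaining_effort: float  # hypothetical: effort still needed to finish (engineer-weeks), > 0
    cost_per_week: float     # hypothetical: loaded cost of one engineer-week ($)

    def expected_return(self) -> float:
        """Expected payoff minus the cost still to be spent; sunk cost is ignored."""
        return self.p_success * self.payoff - self.remaining_effort * self.cost_per_week

    def expected_dpnl_dt(self) -> float:
        """Expected return per remaining unit of effort, comparable across bets."""
        return self.expected_return() / self.remaining_effort


def keep_or_kill(bet: Bet) -> str:
    """Keep a bet only while its forward-looking expected return is positive."""
    return "keep" if bet.expected_return() > 0 else "kill"


bet = Bet("reranker", p_success=0.3, payoff=1_000_000, remaining_effort=10, cost_per_week=20_000)
print(keep_or_kill(bet))  # keep: 0.3 * 1M - 10 * 20k = +100k
bet.p_success = 0.15      # new data lowers the odds
print(keep_or_kill(bet))  # kill: 0.15 * 1M - 200k = -50k
```

"Improving our odds" shows up directly: anything that raises `p_success` or cuts `remaining_effort` raises the expected return.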

dP&L/dt also lets me go after project after project that moves this number. Improving it month after month is how I have built the mythical 10X teams. Some fun examples: outside pure tech, I could justify improving retention because it raises overall value by reducing onboarding costs. I took on a small, two-week project that delayed a migration by two years, earning a higher return on capital costs. My personal favorite: simply increasing release speed means the capital spent on a feature is returned by revenue sooner. A project that simply exposed costs saved an untold amount of spending, because teams immediately noticed big new expenses that otherwise would have accumulated. With a deep understanding of finance, manufacturing, and software engineering, I end up doing a lot of teaching for engineers, but the payoffs can be immense.
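The migration and release-speed examples are both time-value-of-money arguments. A minimal sketch using standard annual discounting, PV = amount / (1 + r)^t; the discount rate, amounts, and timings below are hypothetical placeholders, not figures from these projects.

```python
def present_value(amount: float, rate: float, years: float) -> float:
    """Today's value of `amount` paid or received `years` from now at annual `rate`."""
    return amount / (1 + rate) ** years


def deferral_savings(cost: float, rate: float, years_deferred: float) -> float:
    """How much cheaper a cost becomes, in today's money, by paying it later."""
    return cost - present_value(cost, rate, years_deferred)


def acceleration_gain(revenue: float, rate: float, years_now: float, years_faster: float) -> float:
    """Extra present value from receiving `revenue` at `years_faster` instead of `years_now`."""
    return present_value(revenue, rate, years_faster) - present_value(revenue, rate, years_now)


# Hypothetical: deferring a $1M migration by two years at a 10% cost of capital.
print(round(deferral_savings(1_000_000, 0.10, 2)))  # 173554
# Hypothetical: shipping faster pulls $500k of revenue in from 12 months to 6 months.
print(round(acceleration_gain(500_000, 0.10, years_now=1.0, years_faster=0.5)))  # 22186
```

Weighing either gain against the effort it costs (two weeks, in the migration example) is the same dP&L/dt comparison as before.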

I have found that dP&L/dt is cultural, and pivoting an existing organization to it can be hard; new teams, orgs, or companies can instead be built with it at the core. While it's tempting to start a company around it, I am sure there is one out there that already works this way; I just need to find it.