Prompt Engineering is the New JavaScript

Every generation, programming reinvents itself through a new abstraction layer, upending the technology ecosystem in large but predictable ways.

In the 1970s, it was assembly → C. In the 1990s, it was C → Java. In the 2000s, it was Java → Python, Ruby & JavaScript. Now, it’s prompting.

What do I mean? Well, you can write 10,000 lines of C that can be compiled to 1,000,000 lines of assembly. You can also write 100 lines of Python, Ruby or JavaScript that can be interpreted to 10,000 lines of C (and 1,000,000 lines of assembly). And now, imagine your favorite LLM as a compiler: you can write 1 line of prompt that can be converted to all of the above too. This is what programmers call abstraction.
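To make the arithmetic concrete, here is a minimal sketch of how those round numbers compound. The 100x factors for C and assembly come from the figures above; the 100x factor from prompt to script is my own placeholder, since the text only says a single prompt line can yield "all of the above".

```python
# Illustrative expansion factors, using the round numbers from the text.
# The prompt -> scripting factor is an ASSUMPTION, not a figure from the essay.
LAYERS = [
    ("prompt", "scripting language", 100),  # assumed placeholder
    ("scripting language", "C", 100),       # 100 lines -> 10,000 lines
    ("C", "assembly", 100),                 # 10,000 lines -> 1,000,000 lines
]


def expand(lines, layers=LAYERS):
    """Return (layer name, line count) pairs from the top layer down."""
    counts = [(layers[0][0], lines)]
    for _, lower, factor in layers:
        lines *= factor
        counts.append((lower, lines))
    return counts


if __name__ == "__main__":
    for layer, count in expand(1):
        print(f"{layer:>20}: {count:>12,} lines")
```

The point is not the exact ratios, which are rough at best, but that each layer multiplies the leverage of the one above it.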

When revolutionary abstractions emerge (from Java's cross-platform usability to AWS' cloud infrastructure to Objective-C's mobile SDKs), a predictable cascade of transformations follows. Accessibility explodes as barriers fall. New failure modes replace old ones: buffer overflows give way to dependency hell, multi-threaded UI race conditions and network partitions. Engineering culture and organizations shift from individual proofs to collaborative, iterative problem-solving. Economics transform as middleware commoditizes while infrastructure and application layers gain value. This pattern of abstraction shifts and cascading changes has repeated throughout computing history: assembly to C (1970s), C to Java (1990s), interpreted languages (2000s), and cloud/mobile (2010s). Now, we're about to witness the next stage in real time.

Taking the long view, prompt engineering is the next stage in a long, inevitable series of abstraction shifts and cascading transformations. It's neither a passing fad nor a once-in-a-lifetime revolution. If you dismiss it, you’ll be left behind, just like the “scripting languages aren’t real languages” programmers of the 2000s. If you work yourself into a frenzy, you’ll miss the real opportunities because you’re too tired or distracted.

As a kid, I learned to surf on the beaches of Los Angeles. Anyone who’s surfed can tell you how humbling it is to try to paddle out the first few times and come up snorting saltwater. I learned an important lesson that summer: You can’t fight the ocean but, if you figure out how it behaves, you can learn to duck under the wave and surf the next one to glory.

Three Causal Mechanisms

So how do abstraction shifts behave? As one of my favorite mentors says, “just stare out the window for a few minutes.” The world isn’t random; there are some basic physics to how it all works.

I claim three basic mechanisms explain a bunch of things about abstraction shifts:

The Accessibility Flywheel: With lower technical barriers, more people can build more things; with new economies of scale, others build better tools that lower barriers even further.

The Complexity Paradox: Each new abstraction simplifies development by hiding historical complexity, but creates newer and harder sources of complexity (read: bugs) at higher levels of abstraction.

The Value Barbell: As the meat-and-potatoes work of yesteryear gets commoditized away, economic value gets pushed to the extremes: low-level system builders and high-level domain applicators.

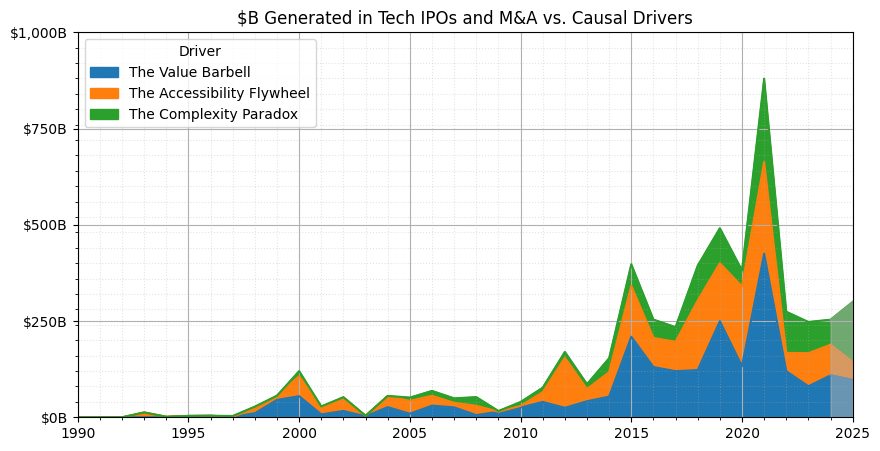

If you work out the math (and make some assumptions), it turns out the Value Barbell explains 32% of the value created from M&A and IPOs in the past 45 years, the Accessibility Flywheel explains 27% and the Complexity Paradox explains 15%. More on that in a minute.

Does This Actually Work?

Let’s be honest, this is just a hypothesis. Everyone and their mother has a hypothesis for AI right now. A good hypothesis should at least be accurate: can this one pass a backtest? Let’s consider a few examples:

Remember when two founders in a garage became the Silicon Valley archetype? Y Combinator launched in 2005, a year after Ruby on Rails launched in 2004. It wasn't magic; it was the Accessibility Flywheel in action. Suddenly, developers could launch MVPs in weeks, not months, with teams of 3, not 30.

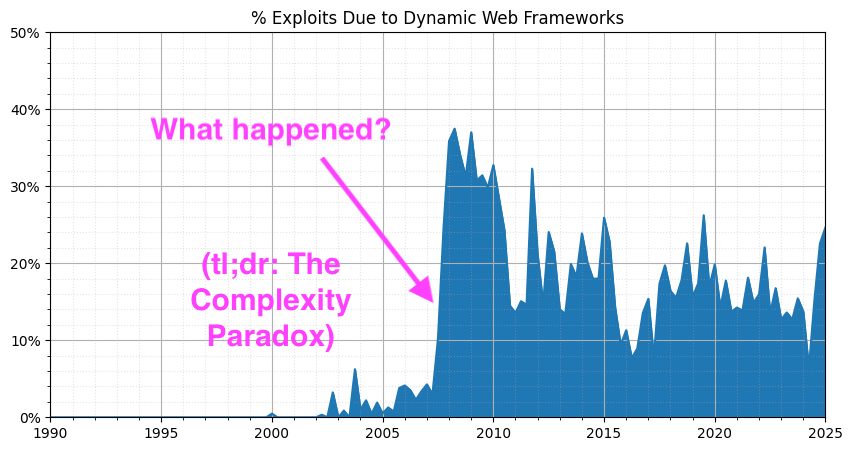

But for every action, there's an equal and opposite reaction. Alongside this democratization came a predictable explosion of XSS vulnerabilities, CSRF attacks, and SQL injections, AKA the Complexity Paradox, as we traded yesterday's bugs for tomorrow's. I downloaded all reported NIST exploits over the past 35+ years, cross-referenced assigned root causes related to dynamic web frameworks and calculated the percentage — just look at the step change:
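For anyone who wants to reproduce that kind of calculation, here is a minimal sketch. It assumes each vulnerability record has already been reduced to a (year, CWE ID) pair, and it uses CWE-79 (XSS), CWE-89 (SQL injection) and CWE-352 (CSRF) as a stand-in for "root causes related to dynamic web frameworks". Both the record shape and that choice of CWE set are my assumptions, not necessarily the method used above.

```python
from collections import defaultdict

# ASSUMPTION: these three CWE IDs stand in for "web framework" root causes.
WEB_CWES = {"CWE-79", "CWE-89", "CWE-352"}  # XSS, SQL injection, CSRF


def web_share_by_year(records):
    """Compute the share of web-related vulnerabilities per year.

    records: iterable of (year, cwe_id) pairs, one per reported vulnerability.
    Returns {year: fraction of that year's records whose CWE is in WEB_CWES}.
    """
    totals = defaultdict(int)
    web = defaultdict(int)
    for year, cwe in records:
        totals[year] += 1
        if cwe in WEB_CWES:
            web[year] += 1
    return {year: web[year] / totals[year] for year in sorted(totals)}


if __name__ == "__main__":
    # Toy records for illustration only; these are NOT real vulnerability data.
    toy = [
        (1999, "CWE-120"), (1999, "CWE-120"),
        (2006, "CWE-79"), (2006, "CWE-89"),
        (2006, "CWE-120"), (2006, "CWE-352"),
    ]
    print(web_share_by_year(toy))
```

A step change would show up as a jump in the returned fraction between adjacent years.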

The mobile revolution tells the same story. Before iOS, developing mobile applications required arcane knowledge of embedded systems, cryptic SDKs, and carrier relationships that kept innovation locked behind practical and economic barriers. When location services became accessible through simple SDKs, we didn't just get better maps — we got entirely new categories of businesses. Uber, Pokémon GO, and countless others emerged from that abstraction shift, creating a $20+ billion market in a decade.

But it's not just about moving up. While WhatsApp built an app serving 900 million people with only 50 engineers, NVIDIA created enormous value at the lowest abstraction levels. Its stock has risen 17x in the last five years because the company understood that as middle-layer tasks get commoditized, exceptional value emerges at both extremes. It saw that as AI abstractions simplified development, whoever built the specialized silicon foundations would become indispensable infrastructure, capturing massive value across an entire technological revolution. In a nutshell: The Value Barbell.

Downstream, as value, difficulty and people evolve, so do culture and organizations. When I started at Amazon in the early 2000s, the entire company ran on a single C++ monolith called Obidos. Back then, bi-weekly release cycles on 50+ million lines of code forced ~1000 engineers to operate in lockstep; God help you if you broke the build. A decade later, with microservices and cloud infrastructure, small, fast-moving, autonomous teams could build and launch (and break and fix) functionality without talking to anyone else.

There are dozens of other examples: enterprise apps going 70% low-code; US engineers tripling; microservices reaching 85% of big companies; left-pad breaking the Internet; cloud spending exploding to $595 billion; Apple paying app developers $300+ billion; Tesla vertically integrating from chips to self-driving algorithms. Each showcases the same pattern — as we abstract away yesterday's complexity, we unlock more builders, create new kinds of bugs and push economic value to the extremes.

This isn't random — it's physics. When you abstract away today’s challenges, you necessarily create new failure modes, new audiences and new economic winners and losers. The same forces that transformed programming from assembly to JavaScript are now transforming it from JavaScript to prompts.

What Comes Next?

“OK, let’s say I believe you. What does this mean?” A useful hypothesis makes useful predictions. If you play out the above to its logical conclusion, a few things emerge. Let’s consider the Complexity Paradox and the Value Barbell first:

In an AI-augmented world, what do tomorrow's bugs look like? Modern LLMs are designed to mimic (and please) humans, suggesting future systems may inherit human cognitive errors. Imagine an AI zero-day that exploits reciprocity bias — tricking your AI customer service agent into issuing a $1000 voucher because a scammer was "nice" to it first. You can vet standard code for SQL injection vulnerabilities, but how do you vet AI code for psychological vulnerabilities?

Will we accept distributional failure in AI-driven businesses? Traditional software mostly produces binary outcomes: it either works or it doesn’t. AI systems produce distributional outcomes, with irreducible uncertainty in any single case. Will businesses and users accept this? My bet: human psychology says no. We punish negative outcomes disproportionately, even when average performance improves. This mismatch between technical reality and human expectations will drive a wave of innovation in AI safety, testing and containment. Companies that solve the “reliability gap” will win.
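One way to picture the difference in testing terms: instead of a single pass/fail assertion, you run the system many times and ask how confident you can be in its success rate. The sketch below uses a Wilson score interval for that. The `run_once` callable and the acceptance rule are hypothetical illustrations, not a reference to any particular testing tool.

```python
import math


def wilson_interval(successes, trials, z=1.96):
    """Wilson score interval for a binomial proportion (z=1.96 is about 95%)."""
    if trials <= 0:
        raise ValueError("need at least one trial")
    p = successes / trials
    denom = 1 + z**2 / trials
    center = (p + z**2 / (2 * trials)) / denom
    half = z * math.sqrt(p * (1 - p) / trials + z**2 / (4 * trials**2)) / denom
    return center - half, center + half


def passes_reliability_bar(run_once, trials, min_success_rate):
    """Accept only if the interval's lower bound clears the required rate.

    run_once: hypothetical zero-argument callable that returns True when one
    end-to-end run of the AI system produced an acceptable result.
    """
    successes = sum(1 for _ in range(trials) if run_once())
    lower, _ = wilson_interval(successes, trials)
    return lower >= min_success_rate


if __name__ == "__main__":
    # Even a perfect 100-for-100 record only supports a modest lower bound.
    print(wilson_interval(100, 100))
```

Under this rule, 100 clean runs out of 100 give a lower bound of roughly 96%, which is enough to clear a 95% bar but not a 99% one. That is the reliability gap in miniature: a handful of green test runs says much less about a distributional system than it does about a deterministic one.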

When you work out the math, it turns out the Value Barbell is the #1 driver of technology value via M&A and IPOs in the past 45 years (32% of all value), but the Complexity Paradox is the fastest-growing driver, growing from 5% to 20% of tech valuations in the past 25 years. Check out the below — I just downloaded all US tech IPOs and acquisitions >$1B from Crunchbase, asked Claude to categorize everything and then plotted it.
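The aggregation step in that analysis can be sketched roughly as follows. It assumes each deal has already been labeled with one mechanism and reduced to a (year, label, value) tuple; the tuple shape, the label strings and the per-decade bucketing are my assumptions for illustration, not the actual pipeline.

```python
from collections import defaultdict


def value_share_by_decade(deals):
    """Compute each label's share of total deal value per decade.

    deals: iterable of (year, label, value) tuples, where label is the
    mechanism assigned to the deal and value is its size in any one currency.
    Returns {decade: {label: share of that decade's total value}}.
    """
    by_decade = defaultdict(lambda: defaultdict(float))
    for year, label, value in deals:
        by_decade[(year // 10) * 10][label] += value

    shares = {}
    for decade in sorted(by_decade):
        total = sum(by_decade[decade].values())
        if total == 0:
            continue  # skip decades with no recorded value
        shares[decade] = {
            label: value / total for label, value in by_decade[decade].items()
        }
    return shares


if __name__ == "__main__":
    # Toy deals for illustration only; these are NOT real Crunchbase figures.
    toy = [
        (1998, "barbell", 2.0), (1999, "flywheel", 2.0),
        (2021, "paradox", 1.0), (2022, "barbell", 3.0),
    ]
    print(value_share_by_decade(toy))
```

The labeling step is where most of the judgment lives, so any percentages that come out of a pipeline like this are only as good as the categorization feeding it.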

While the Accessibility Flywheel is a solid, steady driver of shareholder value over the decades, my guess is its impact is felt more organizationally and socially.

Who’ll be the 10x engineers of 2030? Probably not today's JavaScript wizards. The skill ceiling will rise dramatically while the floor drops to near zero. We're already seeing signs of the new elite: people who can architect complex request-response chains, prompt ensembles, and system-level AI interactions — people who are part psychologist, part philosopher, part systems architect. My bet is that it’ll be the folks who can personally embody the Value Barbell, simultaneously thinking about low-level system constraints and high-level LLM psychology.

How will organizations resolve the tension between democratized development and centralized control? If everyone writes code, is everyone an engineer? (And does everyone report to the CTO?) No. Like data teams today, AI will likely end up following a hybrid model: centralized platforms (under CTOs), centralized risk management (under CISOs), and federated AI-enabled operations throughout the company. As more code gets written by more AI-enabled teams, my guess is that technology organizations will look very different from today's.

Who Cares?

We're all just going to get eaten by the singularity anyways. Why does it matter?

I joke that 80% of my job reduces to "I've seen this movie before — I don't think you'll like how it ends, you should do something different." In the past year, I've probably talked to hundreds of technology executives, startup founders and investors. Many fall into two camps:

"Move along, nothing to see here." Dismissing it as just another passing fad, they’re trying to focus on next quarter’s P&L. Let’s name it: they’re the IBMs of today.

"OMG, the train is leaving, EVERYONE LET'S GO!" Frenzied by FOMO, these leaders are throwing money at anything with "AI" in the pitch deck. They're the WeWorks of today.

We've invested and committed $1 trillion into modern AI, e.g. Stargate, GenAI startups. Power laws being power laws, most of that won’t generate a profit (but some of it will probably generate a lot). We’re not in Kansas anymore, Toto.

I claim there’s a third path: strategic patience + leveraged action. Identify where complexity will migrate next; get there first. Red-team how the Value Barbell might upend your industry; disrupt yourself. Find your organizational points of leverage and kickstart the Accessibility Flywheel. Some teams might need to guard against psychological vulnerabilities of AI systems, whereas others might need to look for disruptive

The question today isn't if modern AI or prompting will transform the technology ecosystem — it will; the tide is coming in and the ocean always wins. Just as interpreted languages, mobile SDKs and cloud infrastructure transformed the technology ecosystem, so will prompting. The fundamental physics of abstraction shifts and cascading transformations guarantee it.

Instead, the question is how will you approach the ocean? Ignore it, and you'll get hammered by a wave and end up with your face in the sand snorting saltwater. Jump in at the wrong place (or wrong time), and the waves will drag you against the coral and you’ll end up snorting even more saltwater. Or... just hang back for a beat, learn the ocean’s rhythms, strategically dive in (and under) a wave and then ride the next one to glory.

I don't know if there'll be a singularity or not in the next N years or what p(doom) is. What I do know is if there is a singularity, ignoring one of the most disruptive forces we’ve seen in a generation (or not taking a few minutes to properly understand it) isn’t a recipe for success for you, your family or your team.

I don't have a crystal ball (anyone who says they do is lying), but I do think reality follows some basic physics. Data, people and organizations behave in (mostly) predictable ways. While the past is no guarantee of future performance, it is the best predictor we have; ignoring it is a good way to end up with a mouthful of sand and a noseful of saltwater.

Hang five, my friend.